First, as others have mentioned, special thanks to Steve and Jack for hosting the 2009 Spring Experiment. It is tremendously beneficial to be able to focus on the meteorology, and to weigh many factors, without the distractions of email and phones. Here is a reproduction of portions of a summary I prepared for my forecast staff:

There are considerable issues regarding the ability of mesoscale (and potentially storm-scale) models to reproduce observed convective initiation, morphology, and evolution. Whether for near-term assistance with high-impact decision support services or for long-term initiatives such as Warn on Forecast, the future success of improving services beyond what detection systems (radar/satellite) provide depends on the ability of models to portray reality. Current challenges for models include: 1. the ability to resolve features, even at high (1 km) resolution; 2. computational limitations; 3. initial conditions and data assimilation; 4. a still-poor understanding of physics and of how physics is represented in models; and 5. verification of non-linear, object-like features. One main goal of the EFP, as I found it, is identifying where to focus efforts to improve the models. For example, given a fixed amount of computational resources, should one very high resolution model be run, or an ensemble of lower resolution models? Which observations, assimilation methods, and initial conditions seem most critical? Is a spatially correct forecast of a squall line with a 3-hour timing error a “good” forecast? Is it a better forecast than a poor spatial representation of the squall line (e.g., a blob) with spot-on timing?
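One reason those last two questions are hard: a conventional grid-point score such as the critical success index, CSI = hits / (hits + misses + false alarms), can rank the blob above a well-shaped but displaced line, because a displaced feature is penalized twice, once as a miss where it was observed and again as a false alarm where it was forecast. The sketch below is a hypothetical illustration on a toy 10 x 10 grid, not EFP data or methods; the helper names (`line`, `blob`, `csi`) and the assumption that a timing error for an eastward-moving line shows up as a three-column eastward displacement are choices made only for the example.

```python
# Hypothetical toy example of grid-point (contingency table) verification.
# Grids, shapes, and the displacement-as-timing-error mapping are assumptions.

def make_grid(ny, nx):
    """Return an ny x nx grid of zeros (no convection)."""
    return [[0] * nx for _ in range(ny)]

def line(ny, nx, col):
    """Hypothetical north-south squall line, one grid column wide at `col`."""
    g = make_grid(ny, nx)
    for j in range(ny):
        g[j][col] = 1
    return g

def blob(ny, nx, j0, j1, i0, i1):
    """Hypothetical rectangular 'blob' covering rows j0..j1-1, cols i0..i1-1."""
    g = make_grid(ny, nx)
    for j in range(j0, j1):
        for i in range(i0, i1):
            g[j][i] = 1
    return g

def csi(forecast, observed):
    """Critical success index = hits / (hits + misses + false alarms)."""
    hits = misses = false_alarms = 0
    for frow, orow in zip(forecast, observed):
        for f, o in zip(frow, orow):
            if f and o:
                hits += 1
            elif o:
                misses += 1
            elif f:
                false_alarms += 1
    denom = hits + misses + false_alarms
    return hits / denom if denom else 0.0

observed = line(10, 10, col=5)
displaced = line(10, 10, col=8)      # right shape, wrong place (assumed timing error)
smeared = blob(10, 10, 3, 7, 4, 7)   # poorly shaped, but overlaps the observed line

print(csi(displaced, observed))           # 0.0
print(round(csi(smeared, observed), 3))   # 0.222
```

By this grid-point measure the meteorologically better forecast (the correctly shaped line) scores zero, while the blob earns partial credit, which is exactly the ambiguity the questions above raise.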

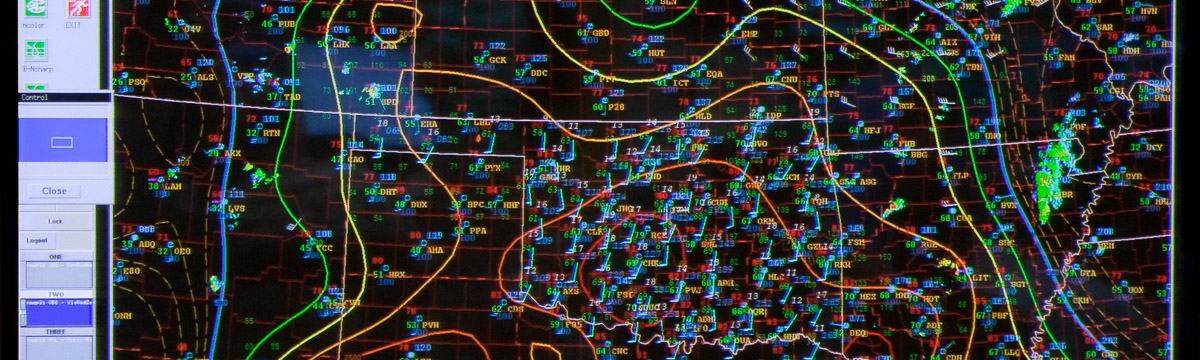

The main challenge we faced was literally a once-in-30-years event: poor convective conditions across the CONUS during the week. It was the first time since 1979 that SPC did not issue a single watch during the corresponding week; the previous low was 10 watches for the same week in 1984! We did have pulse convection and a few isolated supercells in eastern Montana, eastern Wyoming, and western Nebraska, but the models struggled even to develop convection, and in some cases overdeveloped it. After reflecting on our outlooks, verification, and evaluation/discussion of the models, some general conclusions can be drawn:

1. Mesoscale models are a long way from consistently depicting convective initiation, morphology, and evolution. Lou Wicker and Morris Weisman estimate it might be 10-15 years before models reach the point where confidence in their projections would be high enough to warn for storms before they develop, presuming that the inherent chaos of convection and the limits of initial conditions and data assimilation even allow that level of predictability. Progress will surely be made in computational power, in initial conditions (e.g., radar, satellite, and land-surface data), and to some extent in model physics, but forecasters will continue to play a significant role for the foreseeable future.

2. Defining forecast quality is extremely difficult when considering individual supercells, MCSs, and other convective features. What may be an excellent 12-hour outlook of severe convection for a County Warning Area could be nearly useless for a hub airport TAF. Timing and location are just as critical as a meteorologically correct depiction of convective morphology. Ensembles may be able to identify the most likely convective mode, but offer only modest help with timing and location.

3. The best verifying models through a 36-hour forecast seem to be those with 3-4 km grid spacing that were “lukewarm” started, with radar data brought into physical balance through the assimilation process. The initialization/assimilation scheme seems to have more influence than differences in the model physics. Ensemble methods, portraying “paintball splats” where members exceed a particular radar echo or other variable threshold, seem to offer some additional guidance beyond single deterministic runs, although it is very hard to assess the viability and quality of the solution envelope when making an outlook or forecast. The Method for Object-based Diagnostic Evaluation (MODE) is a developing program for meaningful forecast verification, based on the notion of objects (e.g., a supercell or an MCS) rather than on grid-point-based comparisons.
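The object-based idea can be sketched in a few lines: threshold a field, group contiguous exceeding grid points into objects, then compare object attributes such as area and centroid location instead of individual grid points. This is a simplified, hypothetical illustration of the concept, not MODE's actual algorithm (MODE itself also smooths the field before thresholding and uses fuzzy-logic interest scores to match objects). The function names, the 40 dBZ threshold, 4-connectivity, and the nearest-centroid matching rule are all assumptions chosen for the example.

```python
# Hypothetical sketch of object-based verification (NOT the MODE algorithm).
# Threshold, connectivity, and matching rule are illustrative assumptions.
from collections import deque
import math

def find_objects(field, threshold):
    """Label 4-connected regions where field >= threshold.
    Returns a list of dicts with each object's area and (row, col) centroid."""
    ny, nx = len(field), len(field[0])
    seen = [[False] * nx for _ in range(ny)]
    objects = []
    for j in range(ny):
        for i in range(nx):
            if field[j][i] >= threshold and not seen[j][i]:
                # Breadth-first flood fill starting from this seed point.
                queue = deque([(j, i)])
                seen[j][i] = True
                cells = []
                while queue:
                    cj, ci = queue.popleft()
                    cells.append((cj, ci))
                    for dj, di in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        nj, ni = cj + dj, ci + di
                        if (0 <= nj < ny and 0 <= ni < nx
                                and not seen[nj][ni]
                                and field[nj][ni] >= threshold):
                            seen[nj][ni] = True
                            queue.append((nj, ni))
                area = len(cells)
                cy = sum(c[0] for c in cells) / area
                cx = sum(c[1] for c in cells) / area
                objects.append({"area": area, "centroid": (cy, cx)})
    return objects

def match_objects(fcst_objs, obs_objs, max_dist):
    """Pair each observed object with the nearest forecast object whose
    centroid lies within max_dist grid lengths (hypothetical matching rule).
    Returns (observed, forecast or None, distance or None) tuples."""
    pairs = []
    for o in obs_objs:
        best, best_d = None, None
        for f in fcst_objs:
            d = math.dist(f["centroid"], o["centroid"])
            if d <= max_dist and (best_d is None or d < best_d):
                best, best_d = f, d
        pairs.append((o, best, best_d))
    return pairs

# Hypothetical reflectivity fields (dBZ): a line at column 5, forecast at column 8.
obs = [[45 if i == 5 else 10 for i in range(10)] for _ in range(10)]
fcst = [[45 if i == 8 else 10 for i in range(10)] for _ in range(10)]

obs_objs = find_objects(obs, 40)
fcst_objs = find_objects(fcst, 40)
pairs = match_objects(fcst_objs, obs_objs, max_dist=5)
for o, f, d in pairs:
    print(o["area"], f["area"], d)   # 10 10 3.0
```

Here a correctly shaped forecast line three grid columns east of the observed line is matched as the same object with a 3-grid-length centroid displacement. A forecaster can read that as a location (or, for a moving line, timing) error, rather than as a miss plus a false alarm.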

Overall, the EFP experience was personally rewarding – an opportunity to get away from typical WFO operations and into applied research. The HWT facility and SPC/NSSL staff were fantastic and made for a high-level scientific, yet relaxed, environment. I strongly encourage anyone interested in severe convection from a forecast/outlook/model perspective to consider the EFP in future years.

-Jon Zeitler

NWS Austin/San Antonio, TX