Finally got a chance to type some words (I hope not too many) after getting back to the UK.

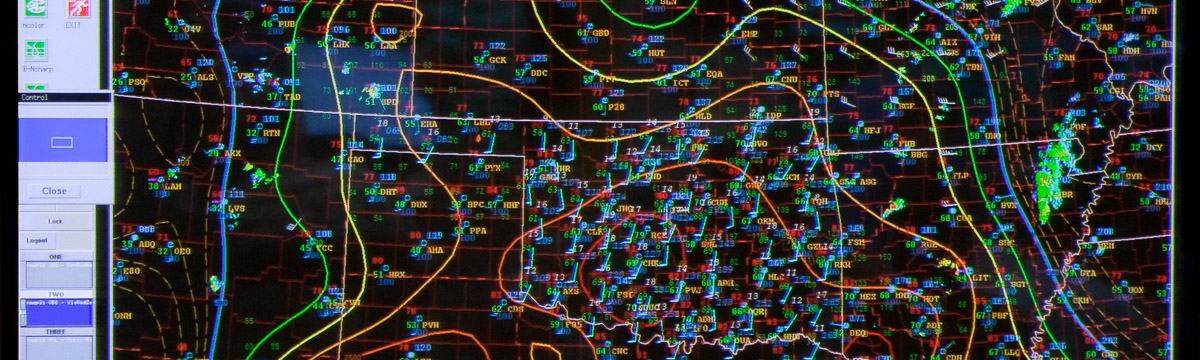

First I would like to say thank you for a very enjoyable week, from which I feel I’ve come away having learned a great deal about the particular difficulties of predicting severe convection in the United States and with more insight into the challenges that new storm-permitting models are bringing. I was left with huge admiration for the skill of the SPC forecasters, particularly in quickly synthesising such a large volume of diverse information (observational and model) to produce impressive forecasts. It was also good to see how an understanding of the important (and sometimes subtle) atmospheric processes and conceptual models is used in the decision-making process.

I was also struck by the wealth of storm-permitting numerical models that were available and how remarkably good some of the model forecasts were, and can appreciate the amount of effort that is required to get so many systems and products up, running and visible.

One interesting discussion we touched on briefly was how probabilistic forecasts should be interpreted and verified. The issue raised was whether it is sensible to verify a single probabilistic forecast.

So if the probability of a severe event is, say, 5% or 15%, does that mean the forecast is poor if no events were recorded inside those contours? It could be argued that with probabilities as low as that it is not a poor forecast if events are missed, because in a reliability sense we would expect to miss more often than we hit. But the probabilities given were meant to represent the chance of an event occurring within 25 miles of a location, so if the area is much larger than that, it implies a much larger probability of something occurring somewhere within that area. So it may be justifiable to assess the forecast of whether something occurred within the warning area as if it were almost deterministic, which may be why it seemed intuitively correct to do it like that in the forecast assessments. The problem then is that the verification approach does not really assess what the forecast was trying to convey.
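To put rough numbers on that argument, here is a minimal sketch. It assumes (and this is a strong simplification, since real severe events are spatially correlated) that a contoured area can be treated as a number of independent 25-mile-radius neighbourhoods, each with the same forecast probability. The function name and the example areas are hypothetical, chosen only for illustration.

```python
import math

def prob_any_event(p_point, area_sq_miles, radius_miles=25.0):
    """Chance of at least one event somewhere in an area.

    ASSUMPTION: the area behaves like a set of independent
    neighbourhoods of the given radius, each with probability p_point.
    Spatial correlation in real events would reduce the effective
    number of independent neighbourhoods.
    """
    neighbourhood_area = math.pi * radius_miles ** 2
    # Never use fewer than one neighbourhood, even for tiny areas.
    n_independent = max(1.0, area_sq_miles / neighbourhood_area)
    return 1.0 - (1.0 - p_point) ** n_independent

one_circle = math.pi * 25.0 ** 2  # area of a single 25-mile neighbourhood

for p in (0.05, 0.15):
    for n in (1, 5, 10, 20):
        area_prob = prob_any_event(p, n * one_circle)
        print(f"point prob {p:.2f}, {n:2d} neighbourhoods -> area prob {area_prob:.2f}")
```

Under these assumptions, even a 5% contour covering ten or so neighbourhoods carries a fairly large chance of something happening somewhere inside it, which is consistent with the intuition that the contour can be assessed almost deterministically.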

An alternative is to predict the probability of something happening within the area enclosed by a contour (rather than within a radius around points inside the contour), which would then be less ambiguous for the forecast assessment. The problem then is that the probabilities will vary with the size of the area as well as the perceived risk (larger area = larger probability), which means that for contoured probabilities, any inner contours that are supposed to represent a higher risk (15% contour inside 5% contour), can’t really represent a higher risk at all if they cover a smaller area (which they invariably will!). So at the end of all this I’m still left pondering.
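The size dependence described above can be illustrated with a small sketch. It assumes the same simple independence model (an area made up of independent 25-mile neighbourhoods), and the neighbourhood counts of 20 and 2 are made-up values for illustration, not taken from any forecast.

```python
def area_probability(p_neighbourhood, n_neighbourhoods):
    """Chance of at least one event in an area.

    ASSUMPTION: the area consists of n independent neighbourhoods,
    each with the same event probability.
    """
    return 1.0 - (1.0 - p_neighbourhood) ** n_neighbourhoods

# Hypothetical contours: a large 5% area enclosing a small 15% area.
outer = area_probability(0.05, 20)  # large outer contour
inner = area_probability(0.15, 2)   # small inner contour

print(f"outer 5% contour, 20 neighbourhoods: {outer:.2f}")
print(f"inner 15% contour, 2 neighbourhoods: {inner:.2f}")
print("inner area probability is lower:", inner < outer)
```

With these illustrative sizes, the inner contour has the higher risk per location but the lower probability of anything occurring within it, which is the ambiguity an area-based definition would introduce.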

The practically perfect probability forecasts of updraft helicity were impressive for the forecast periods we looked at. Even the forecasts that weren’t so good seemed to appear better when the information was presented in that way and they seemed to enclose the main areas of risk very well.
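For readers unfamiliar with the idea, a practically perfect field is commonly built by smoothing a grid of observed events with a two-dimensional Gaussian kernel. The sketch below is a minimal pure-Python version under that assumption; the grid, the `sigma_cells` value and the function name are hypothetical, and it is not necessarily the procedure used for the products in the experiment.

```python
import math

def practically_perfect(reports, sigma_cells=1.5):
    """Smooth a binary grid of events with a 2-D Gaussian kernel.

    reports     : list of rows, each a list of 0/1 flags (1 = event in cell)
    sigma_cells : kernel width in grid cells (an assumed, tunable value)
    Returns a grid of the same shape with values capped at 1.
    """
    rows, cols = len(reports), len(reports[0])
    norm = 1.0 / (2.0 * math.pi * sigma_cells ** 2)
    field = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            total = 0.0
            # Add the Gaussian contribution from every cell holding an event.
            for r in range(rows):
                for c in range(cols):
                    if reports[r][c]:
                        d2 = (i - r) ** 2 + (j - c) ** 2
                        total += norm * math.exp(-d2 / (2.0 * sigma_cells ** 2))
            field[i][j] = min(1.0, total)
    return field

# Toy 5x5 grid with a single event in the centre cell.
grid = [[0] * 5 for _ in range(5)]
grid[2][2] = 1
pp = practically_perfect(grid)
for row in pp:
    print(" ".join(f"{value:.3f}" for value in row))
```

The smoothed values peak at the event location and fall away with distance, so contouring the field gives the kind of enclosing areas of risk described above.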

The character of the 4km and 1km forecasts seemed to be similar to what we have seen in the UK (although we don’t get the same severity of storms of course). The 1km could produce a somewhat unrealistic speckling of showers before organising on to larger scales. Some of the speckling was precipitation produced from very shallow convection in the boundary layer below the lid and was spurious. (We’ve also seen in 1km forecasts what appear to be boundary-layer rolls producing rainfall when they shouldn’t, but the cloud bands do verify against satellite imagery even though the rainfall is wrong.)

The 4km models appeared to have a delay in initiation in weakly forced situations (e.g. 14th May) which wasn’t apparent in the strongly forced cases (e.g. 13th May). It appeared to me that the 1km forecasts were more likely to generate bow-echoes that propagate ahead (compared to 4km) and on balance this seemed overdone. There was also an occasion from the previous Friday when the 1km forecast generated an MCV correctly when the other models couldn’t, so perhaps it indicates that more organised behaviour is more likely at 1km than 4km – and sometimes this is beneficial and sometimes it is not. It implies that there may be general microphysics or turbulence issues across models that need to be addressed.

It was noticeable that the high-resolution models were not being interpreted literally by the participants – in the sense that it was understood that a particular storm would not occur exactly as predicted, and it was the characteristics of the storms (linear or supercell etc) and the area of activity that were deemed most relevant. Having an ensemble helped to emphasise that any single realisation would not be exactly correct. This is reassuring, as a danger might be that kilometre-scale models are taken too much at face value because the rainfall looks so realistic (i.e. just like radar).

It seemed to me that the spread of the CAPS 4km ensemble wasn’t particularly large for the few cases we looked at – the differences were mostly local variability, probably because there wasn’t much variation in the larger-scale forcing. The differences between different models seemed greater on the whole. The members that stood out as being most unlike the rest were the ones that developed a faster-propagating bow-echo. This was also a characteristic of the 1km model, and was maybe a benefit of having the 1km model as it did give a different perspective, or added to the confusion, however you look at it! One of the main things that came up was the sheer volume of information that was available and the difficulty of mentally assimilating all that information in a short space of time. The ensemble products were found to be useful I thought – particularly for guidance in where to draw the probability lines. However, it was thought too time-consuming to be able to investigate the dynamical reasons why some members were doing one thing and other members something else (although forecaster intuition did go a long way). Getting the balance between a relevant overview and sufficient detail is tricky I guess and won’t be solved overnight. Perhaps 20 members were too many.

The MODE verification system gave intuitively sensible results and definitely works better than traditional point-based measures. It would be very interesting to see how MODE statistics compared with the human assessment of some of the models over the whole experiment.

One of the things I came away with was that the larger-scale (mesoscale rather than local or storm scale) dynamical features appear to have played a dominant role whatever other processes may also be at work. The envelope of activity is mostly down to the location of the fronts and upper-level troughs. If they are wrong then the forecast will be poor whatever the resolution. An ensemble should capture that larger-scale uncertainty.

Thanks once again. Hope the rest of the experiment goes well.

Nigel Roberts